ADAM — Layer 1: Delivery

How AI capability becomes part of real work

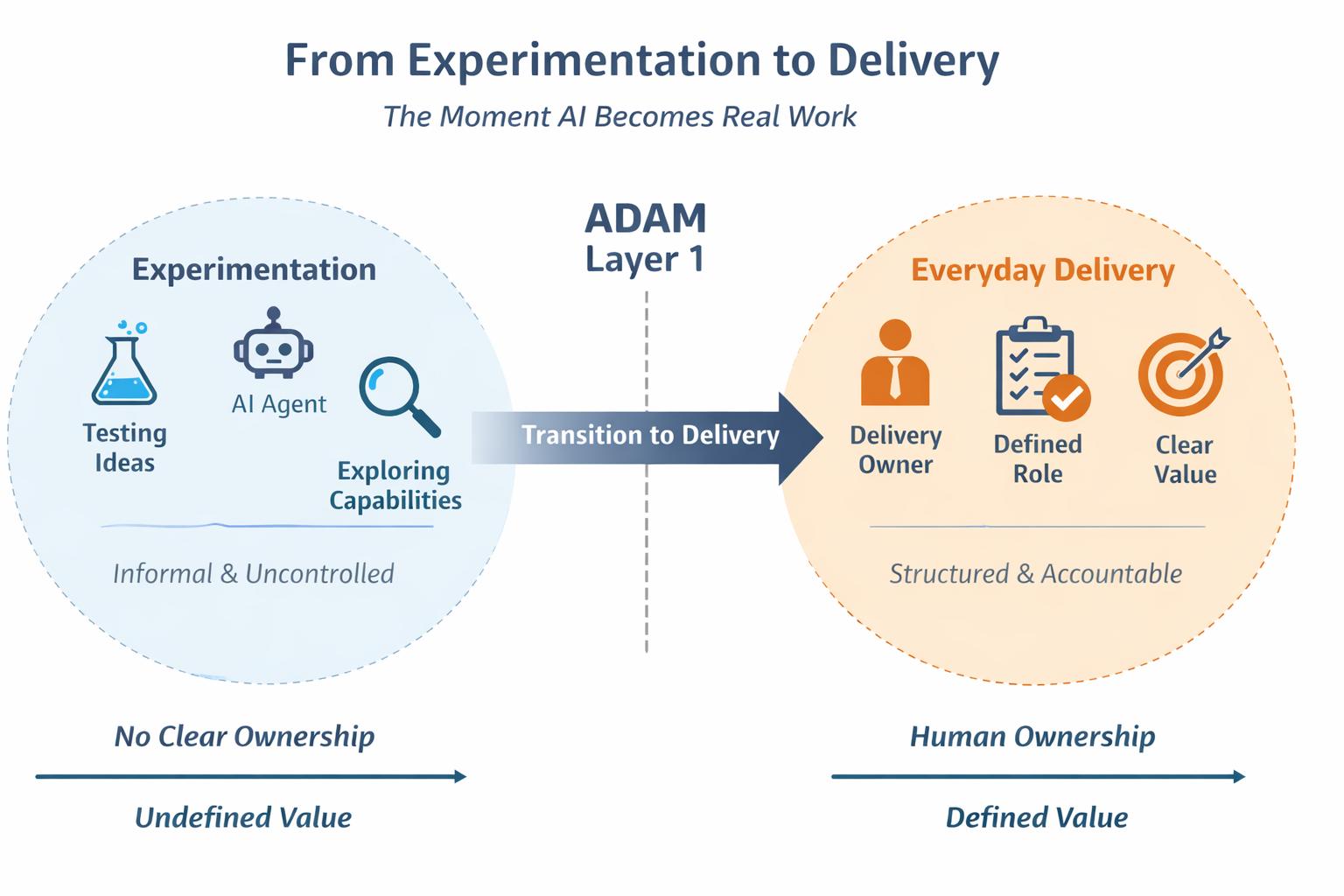

Most AI problems do not begin with autonomy or governance failures. They begin quietly, at the moment AI enters delivery. A model exists, a capability is tested, and then it is used by a team to complete real work. This moment is often treated as operational, not strategic, which is why its risks are underestimated. Layer 1 exists to govern that transition carefully and deliberately.

There is a difference between AI existing and AI participating in delivery. Existence is passive; participation is consequential. Once AI is part of delivery, it influences outcomes, affects accountability, and shapes how value is created. If that transition is unmanaged, ambiguity enters the system and remains hidden until it becomes costly.

Layer 1 comes before any other ADAM layer because it defines the moment accountability begins to shift. Without a clear entry into delivery, governance becomes reactive and evolution becomes political. This layer is about clarity at the earliest possible point, so that everything that follows can remain coherent.

Why Layer 1 exists

AI often enters organisations informally. A team finds a helpful tool, a prototype solves a small problem, and the improvement feels harmless. Early gains are real, but they can mask ambiguity: who is responsible, what outcomes are expected, and whether the change is sustainable. That ambiguity is not created in a single decision. It accumulates slowly, as AI becomes part of everyday work without a clear moment of definition.

The erosion of accountability is gradual. It looks like convenience. It looks like speed. It looks like adoption. Only later does it reveal itself as uncertainty when things do not work as expected. Layer 1 exists to prevent that slow drift by giving teams a shared moment to name what is changing and who remains accountable.

The foundational rule of Layer 1

Delivery ownership always remains human. This rule needs careful explanation because its meaning is easy to misunderstand. Ownership here does not mean doing every task personally. It means being accountable for outcomes, intent, and direction. A team may rely on an agent, but responsibility for what is delivered remains with a named person.

The rule exists because delivery is more than output. It includes judgment, context, and trust. Tools can assist, but they cannot be accountable. When this rule is ignored, teams lose clarity about who stands behind a decision. The result is not freedom; it is confusion. Layer 1 protects the difference between assistance and responsibility.

What Layer 1 actually is (and is not)

Layer 1 is not a gate, an approval board, or a new burden of governance. It is a moment of shared definition. It exists so that AI is understandable before it is relied upon, and so that the organisation can say what is being introduced without hesitation.

In practice, Layer 1 feels like clarity at the point of entry. It is a short, explicit alignment that makes an agent’s role visible, bounded, and accountable before it becomes normalised.

When Layer 1 is applied

Layer 1 is applied whenever AI is introduced into delivery, regardless of how small the change appears. Context matters more than capability. A reused agent is not automatically safe because it has been used elsewhere. The delivery context changes risk, expectations, and accountability. Each time AI participates in a different stream of work, the entry moment must be re-evaluated in that context.
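One way to picture the context rule is to key entry reviews on the pair of agent and delivery context, rather than on the agent alone. The sketch below is illustrative only: `EntryRegistry`, `record_review`, `needs_review`, and the example agent and context names are assumptions made for this example, not something ADAM prescribes.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EntryRegistry:
    """Tracks which (agent, delivery context) pairs have had an entry review.

    Hypothetical helper for illustration only.
    """

    _reviewed: set[tuple[str, str]] = field(default_factory=set)

    def record_review(self, agent_id: str, context: str) -> None:
        self._reviewed.add((agent_id, context))

    def needs_review(self, agent_id: str, context: str) -> bool:
        # Use elsewhere does not count: only a review in this context does.
        return (agent_id, context) not in self._reviewed


registry = EntryRegistry()
registry.record_review("summariser", "customer-support")

print(registry.needs_review("summariser", "customer-support"))  # False
print(registry.needs_review("summariser", "legal-review"))      # True
```

The same agent reused in a new stream of work shows up as needing a review, which is the behaviour the paragraph above describes.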

Understanding roles through responsibility

Roles and Responsibilities in AI-Enabled Delivery

| Role | Role Definition | Responsible For (Outputs, Actions & Artefacts) |
|---|---|---|
| Agent Sponsor | The person who explains why an agent is needed and what problem it exists to solve. | Business rationale, problem statement, success intent, alignment to outcomes, continued relevance of the agent. |
| Delivery Owner (FTE) | The human who owns the outcome of the work, regardless of how much is delegated to agents. | Final outcomes, decisions, approvals, acceptance of results, accountability when things go wrong. |
| Agent (FTA) | An AI agent that executes tasks within clearly defined boundaries but holds no authority or accountability. | Task execution, data processing, generation of outputs, recommendations, actions within agreed limits. |
| ADAM Delivery Steward | The role that protects clarity at the point AI enters delivery and ensures responsibilities are explicit. | Role clarity, boundary definition, entry-into-delivery checks, documentation of ownership, escalation paths. |
| AI / Platform Engineer | The technical role that builds, integrates, and maintains the underlying AI capability. | Agent configuration, integrations, performance tuning, reliability, technical documentation. |
| Domain / Business Lead | The role accountable for value within a business area where AI is applied. | Value realisation, prioritisation, resourcing decisions, alignment to domain objectives. |

Agents contribute capability. Humans retain accountability.

Layer 1 separates intent, accountability, execution, and stewardship to keep delivery understandable. This separation is not about hierarchy; it is about clarity. Each role exists because a different kind of responsibility must be made explicit before AI can be trusted in delivery.

The Agent Sponsor owns the “why.” This role makes the case for introducing an agent and anchors it to a real need. Without a sponsor, the agent has no narrative, only activity. Sponsorship ensures intent is not diluted as the work progresses.

The Delivery Owner (FTE) is the most important role. This person owns the outcome. Layer 1 cannot proceed without a named Delivery Owner because accountability cannot be shared with a system. The Delivery Owner is the human whose judgment carries the weight of the result.

The Agent (FTA) is capability, not authority. It executes within defined boundaries and contributes to delivery, but it does not own intent or consequences. Treating agents as capability keeps responsibility where it belongs and prevents authority from being assumed.

The ADAM Delivery Steward exists to protect clarity, not power. This role ensures the entry moment is real, that ownership is explicit, and that nothing is left undefined. Stewardship keeps the system honest without becoming a barrier.

The decisions Layer 1 forces into the open

Layer 1 exists because unmade decisions cause the most damage. When decisions remain implicit, accountability becomes fuzzy and governance becomes reactive. Clarity at the entry point prevents conflict later because it makes decisions visible while the cost of correction is still low.

The questions here are not administrative. They are foundational: why this agent, what outcomes it influences, how success will be judged, and who will stand behind the result. Layer 1 makes these decisions explicit so that trust and adoption can be earned, not assumed.

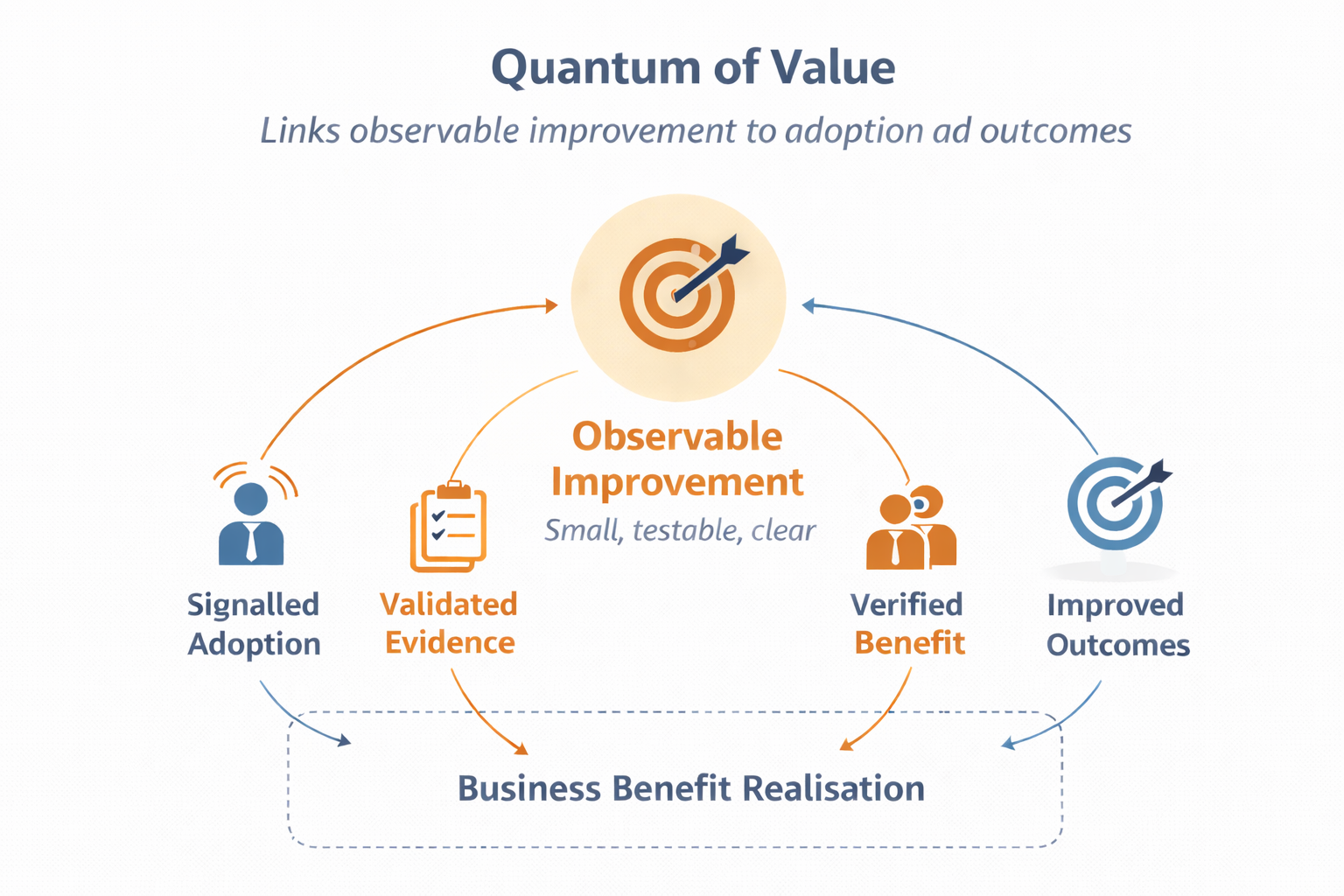

Defining value before capability

Abstract value fails because it cannot be tested. Layer 1 introduces the concept of quanta of value to keep improvement observable. A quantum of value is the smallest meaningful unit of improvement that can be validated in real work. It connects what the agent does to outcomes that teams can see, adopt, and measure.

Validation is different from aspiration. A claim of value is not enough; it must be demonstrated through real usage and clear outcomes. This is why Layer 1 insists that capability only matters once it can be tied to observable improvement.
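The distinction between a claimed and a validated quantum of value can be sketched as a small record with a baseline, a target, and observations from real usage. Everything here is an assumption made for illustration: the `QuantumOfValue` class, its field names, the minimum-observation threshold, and the made-up triage-time numbers.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import mean


@dataclass
class QuantumOfValue:
    """Smallest meaningful improvement that can be checked in real work.

    Hypothetical structure; field names are illustrative.
    """

    description: str
    metric: str
    baseline: float              # measured before the agent was introduced
    target: float                # smallest improvement considered meaningful
    lower_is_better: bool = True
    observations: list[float] = field(default_factory=list)

    def is_validated(self, min_observations: int = 5) -> bool:
        # A claim with too little real usage behind it stays an aspiration.
        if len(self.observations) < min_observations:
            return False
        observed = mean(self.observations)
        if self.lower_is_better:
            return observed <= self.target
        return observed >= self.target


# Illustrative, made-up numbers: minutes to triage a support ticket.
triage = QuantumOfValue(
    description="Faster first triage of support tickets",
    metric="minutes per ticket",
    baseline=30.0,
    target=20.0,
)
triage.observations = [18.0, 22.0, 19.0]
print(triage.is_validated())  # False: not enough real usage yet

triage.observations += [17.0, 21.0]
print(triage.is_validated())  # True: mean of 19.4 meets the target of 20
```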

Making AI visible in delivery

Silent automation feels efficient, but it creates fragility. When AI operates invisibly, teams cannot assess its impact, understand its limits, or build trust in its outcomes. Layer 1 makes AI visible in delivery so that review and pause mechanisms exist before they are needed.

Visibility does not slow delivery; it protects it. It creates an operating environment where teams can learn, adjust, and intervene without crisis. This is how confidence is built in practice.
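As a minimal sketch of what review and pause mechanisms might look like, the wrapper below records every task an agent handles and lets a human pause it without removing it. The `VisibleAgent` class, the `draft_reply` function, and the log format are hypothetical and stand in for whatever agent and logging a team actually uses.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class VisibleAgent:
    """Wraps an agent so its activity is logged and can be paused.

    Hypothetical wrapper for illustration only.
    """

    name: str
    run: Callable[[str], str]
    paused: bool = False
    log: list[dict] = field(default_factory=list)

    def handle(self, task: str) -> Optional[str]:
        if self.paused:
            # Paused work is still recorded, so nothing disappears silently.
            self.log.append({"task": task, "status": "skipped (paused)"})
            return None
        output = self.run(task)
        self.log.append({"task": task, "status": "done", "output": output})
        return output


def draft_reply(task: str) -> str:
    # Stand-in for a real agent call.
    return f"draft for: {task}"


agent = VisibleAgent(name="drafter", run=draft_reply)
print(agent.handle("refund request"))   # draft for: refund request

agent.paused = True
print(agent.handle("billing dispute"))  # None
print(len(agent.log))                   # 2
```

Because skipped tasks are logged as well as completed ones, a reviewer can see what the agent did and what it did not do while paused.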

Autonomy at entry (and why it starts low)

Autonomy is a spectrum, and an agent's position on it can change over time. Layer 1 defines the starting position, not the destination. Most agents begin at low autonomy because trust has not yet been earned and validation is still in progress. Autonomy expands only when evidence and adoption justify it, and Layer 1 ensures the initial position is clear.
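The idea of a low starting position that expands only on evidence can be sketched as an ordered set of levels and a single step-up rule. The level names, the `ENTRY_LEVEL` constant, and the `next_level` function are assumptions for illustration; ADAM as described here does not name specific autonomy levels.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, ordered from lowest to highest."""

    SUGGEST = 1          # agent proposes, a human does the work
    DRAFT = 2            # agent produces output, a human reviews before use
    ACT_WITH_REVIEW = 3  # agent acts, a human reviews afterwards
    ACT = 4              # agent acts within agreed limits


# Layer 1 fixes the starting position, not the destination.
ENTRY_LEVEL = Autonomy.SUGGEST


def next_level(current: Autonomy, value_validated: bool, adopted: bool) -> Autonomy:
    """Move up at most one level, and only when both conditions hold."""
    if not (value_validated and adopted):
        return current
    return Autonomy(min(current + 1, max(Autonomy)))


print(next_level(ENTRY_LEVEL, value_validated=True, adopted=False).name)  # SUGGEST
print(next_level(ENTRY_LEVEL, value_validated=True, adopted=True).name)   # DRAFT
```

Stepping one level at a time is a design choice in this sketch, not a rule from the text; it simply keeps each expansion small enough to be reviewed.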

The entry into delivery moment

This is a deliberate moment. It is the point where AI stops being an experiment and becomes part of delivery. At this moment, what must be true is made explicit: ownership, value, accountability, and the boundaries of use. It is not an approval ritual. It is a shared acknowledgement that the organisation understands what is changing.

When this moment is handled well, it accelerates future progress. Teams can build on a stable foundation rather than renegotiating intent and accountability later.
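What must be explicit at entry (ownership, value, accountability, and boundaries of use) can be sketched as a short record with a check for gaps. The `EntryRecord` class and its field names are hypothetical, and the mapping of accountability to an escalation path is an assumption drawn from the roles table rather than a stated requirement.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EntryRecord:
    """What is made explicit when an agent enters delivery.

    Hypothetical structure for illustration only.
    """

    agent: str
    delivery_context: str
    sponsor: str = ""          # Agent Sponsor: owns the "why"
    delivery_owner: str = ""   # named human who owns the outcome
    value_statement: str = ""  # the improvement the agent is expected to show
    escalation_path: str = ""  # who is contacted when things go wrong
    boundaries: list[str] = field(default_factory=list)

    def missing(self) -> list[str]:
        """Return the names of items that are still undefined."""
        gaps = []
        for name in ("sponsor", "delivery_owner", "value_statement", "escalation_path"):
            if not getattr(self, name).strip():
                gaps.append(name)
        if not self.boundaries:
            gaps.append("boundaries")
        return gaps


record = EntryRecord(
    agent="summariser",
    delivery_context="customer-support",
    sponsor="Head of Support",
    value_statement="Faster first triage of support tickets",
    escalation_path="Support team lead",
    boundaries=["drafts only, no direct customer replies"],
)
print(record.missing())  # ['delivery_owner']

record.delivery_owner = "A. Named Person"
print(record.missing())  # []
```

The point of the check is not approval. It surfaces anything left undefined while the cost of defining it is still low.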

What success looks like

When Layer 1 is working, the organisation feels steady. Teams are confident about who is accountable. Trust grows because teams know an agent's contribution can be reviewed, paused, or reversed. Governance later in the lifecycle becomes lighter because it builds on earlier clarity. The introduction of AI feels deliberate rather than improvised.

Relationship to the rest of ADAM

Layer 1 is foundational. If it is skipped, Layer 2 becomes heavier because governance must retrofit clarity that was never established. Layer 3 becomes political because evolution lacks agreed direction. By contrast, when Layer 1 is done well, the rest of ADAM feels natural and proportional.

Closing

Layer 1 is about clarity, safety, and trust. It is not about control now; it is about speed later. It ensures AI enters delivery in a way that teams can explain, own, and improve over time.