Humans & Agents

People and AI creating value in harmony

AI is often described in extremes: either as something that will replace people, or as something that should never be trusted. Both views miss how value is actually created.

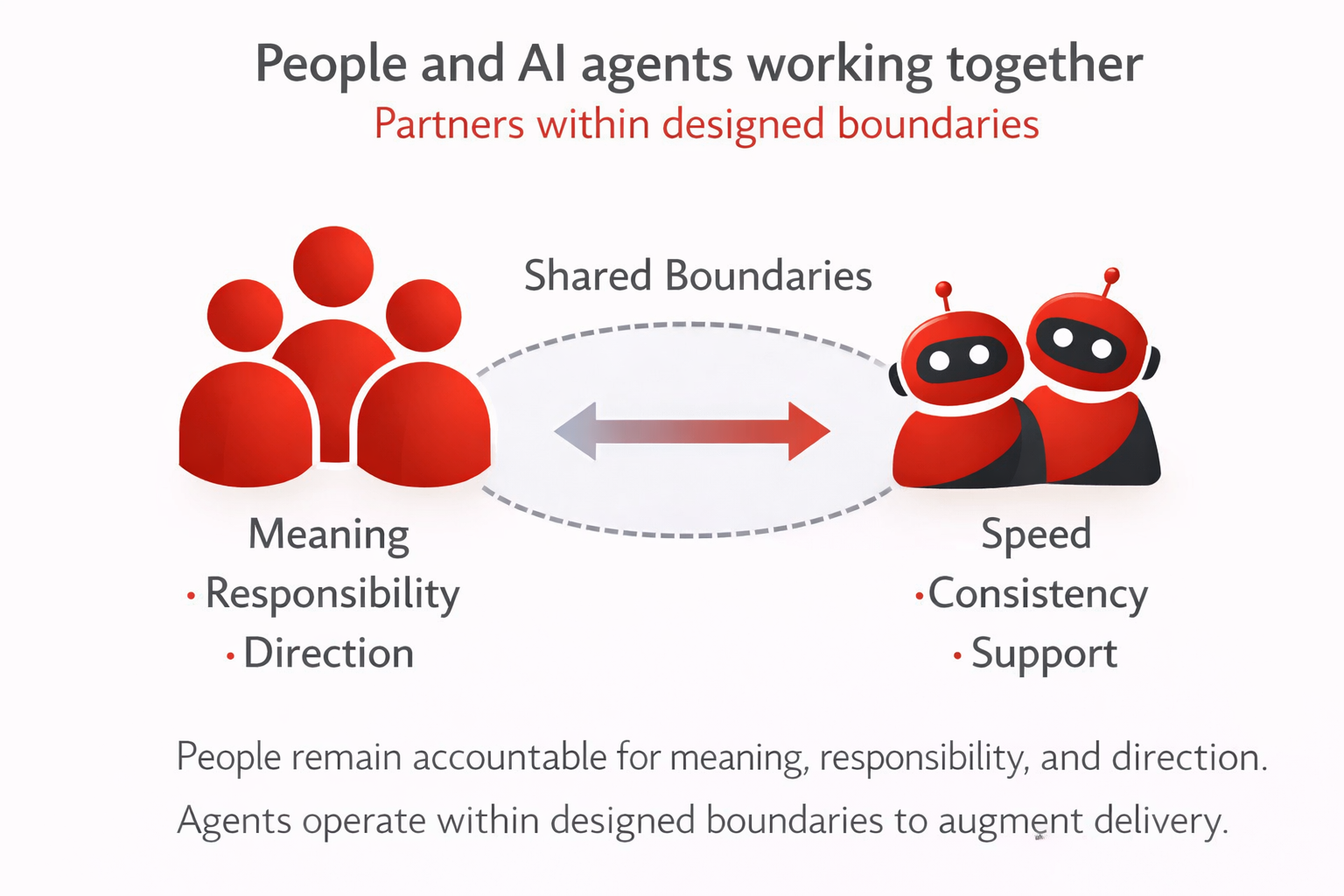

In practice, organisations succeed with AI when people and agents work together, each focused on what they do best. People bring meaning, judgement, and accountability. Agents bring speed, consistency, and the ability to scale well-defined work.

The Agency of Agents model is built on this partnership. It treats AI neither as a substitute for people nor as a separate technical layer. Instead, it defines a deliberate way for humans and agents to collaborate, in which responsibility remains human, capability is extended through agents, and value is created through real delivery rather than experimentation alone.

This page explains how that partnership works in practice, and why clarity of roles, boundaries, and accountability is essential to creating value in harmony over time.

Humans remain the source of meaning

People bring something to delivery that AI cannot. Humans define why work matters, who it serves, and what good looks like in context. They are accountable for outcomes, responsible for decisions, and able to adapt when conditions change in unexpected ways.

This is not about hierarchy or control. It is about meaning, responsibility, and direction. In the Agency of Agents model, people remain the owners of outcomes, the stewards of intent, and the ones who carry responsibility when trade-offs must be made.

Agents extend capability, not headcount

AI agents are treated as Full-Time Agents (FTAs): not as people, and not as replacements for people. An FTA represents a specialised capability that can be applied consistently, at scale, without fatigue. Agents excel where work is repeatable, structured, and well-bounded.

Agents do not bring intent, accountability, or context of their own. They bring speed, consistency, and execution support within the boundaries people define. This is why AI is introduced to support people, not to backfill their roles.

FTE and FTA: a complementary model

The Agency of Agents model distinguishes clearly between FTEs (Full-Time Employees) and FTAs (Full-Time Agents). This distinction matters because it prevents category errors, such as expecting an agent to own an outcome. People own outcomes and accountability; agents provide defined capability within boundaries.

Value is created when these two operate in partnership, each doing what they are best suited for. People provide judgement, direction, and responsibility. Agents provide repeatable execution support and scale.

Partnership by design, value by outcome

The partnership between humans and agents does not happen by accident; it is designed deliberately. Agents are introduced into workflows where they reduce effort, increase consistency, or support scale, while people remain in control of direction and accountability.

This is why ADAM exists: to ensure this partnership is intentional, governed, and evolves responsibly over time. The outcome is not “more AI”. The outcome is better delivery.

Why pilots fail and augmentation succeeds

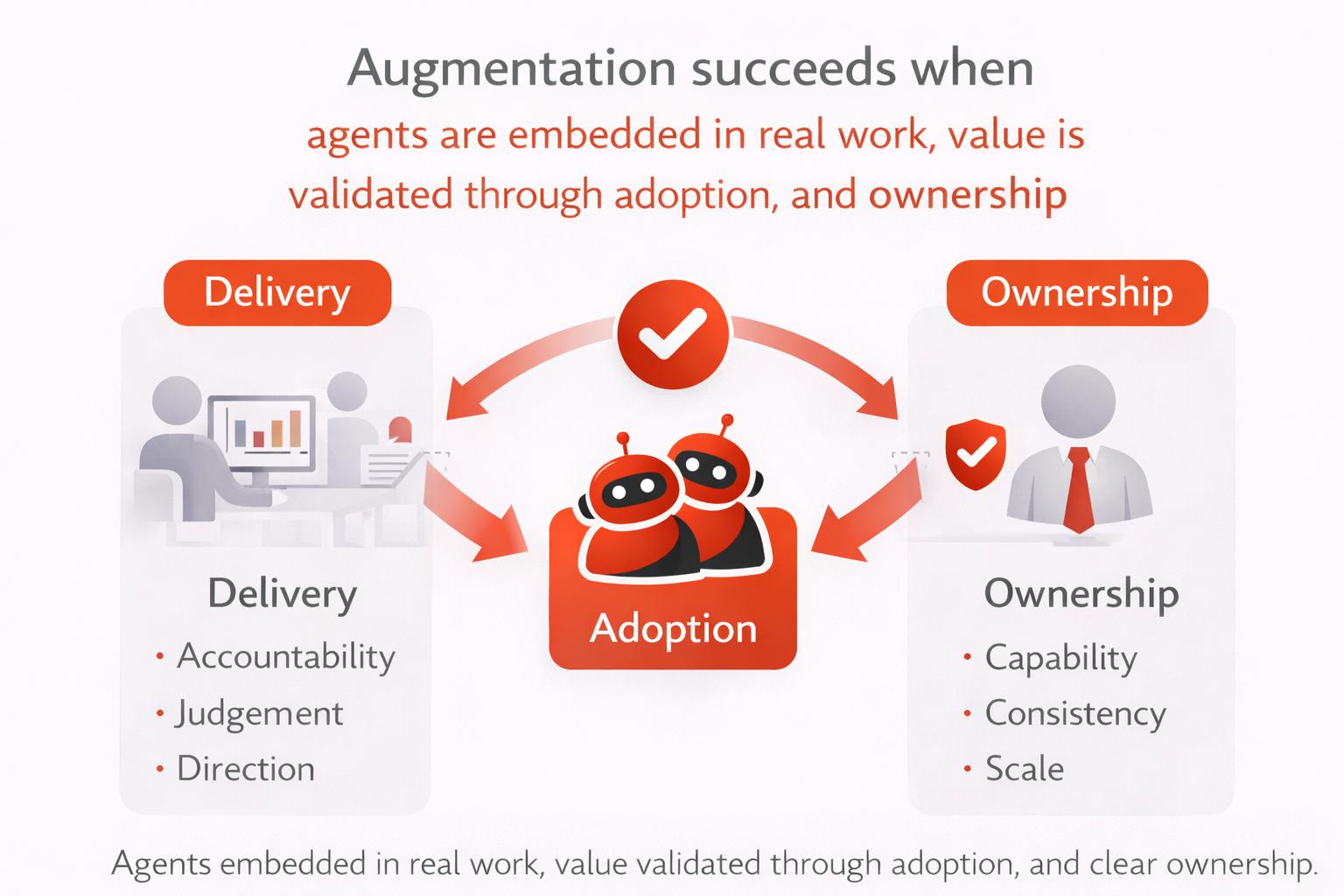

Many AI initiatives stall at the pilot stage because they treat AI as something separate from everyday work. Pilots often fail because ownership is unclear, value is not defined upfront, and success is measured by activity rather than adoption.

In contrast, augmentation succeeds when agents are embedded into real delivery, people understand what the agent does and does not do, value is validated through real use, and trust is built gradually through evidence. The Agency of Agents model is explicitly designed to avoid pilot purgatory.

Trust is built, not assumed

AI is never trusted implicitly. Trust is earned through adoption, validation, oversight, and reversibility. People remain responsible for results, even when agents contribute significantly. This creates confidence rather than fear, because teams understand how decisions are made and how agents can be adjusted or removed if needed.

Working together, over time

As teams become more comfortable working with agents, the nature of the partnership evolves. Agents may take on more responsibility within defined boundaries. Governance may become lighter as confidence grows. Teams may reuse agents across contexts — deliberately and with care.

At every stage, people remain accountable, agents remain bounded, and value remains the measure of success. This is how humans and agents evolve together — creating value in harmony over time.

In summary

The Agency of Agents model is not about replacing people. It is about freeing people to focus on what only humans can do, while agents handle what they are best suited for. People and AI working together. Creating value in harmony. Over time.