ADAM — Layer 2: Governance

How trust, autonomy, and risk are managed in real use

Layer 1 governs the moment AI enters delivery, but Layer 2 governs what happens after people start relying on it. This is where early enthusiasm meets real-world conditions, and where the organisation must decide how to keep progress safe without slowing it down.

The problem Layer 2 solves is not capability, but sustained trust as conditions change. AI is reused in new contexts. Outputs can drift in quality. Boundaries can blur over time. Usage often expands faster than controls unless that expansion is deliberate. Layer 2 exists to stabilise that motion so teams can continue to move forward with confidence.

Governance here is not a brake. It is stabilisation. It is how momentum becomes safe and repeatable. The operating stance is clear: AI is never trusted implicitly. Trust-but-verify is not a slogan; it is the everyday discipline that keeps autonomy aligned with evidence.

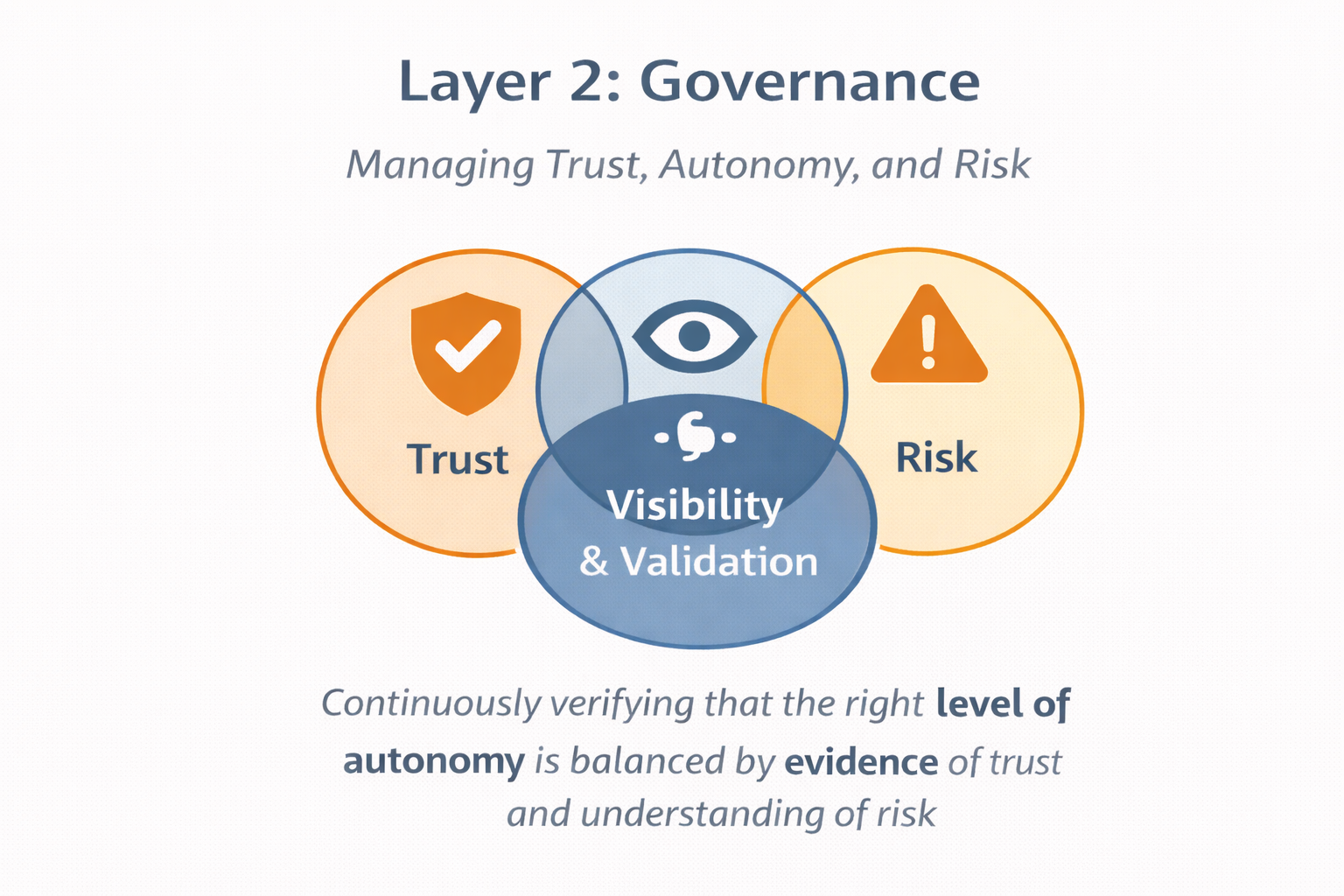

Governance in ADAM is control without constraint — always with consent — implemented through visibility, validation, and reversible autonomy.

What “governance” means in Layer 2

Many people hear “governance” and expect bureaucracy. That expectation comes from past experience: governance has often meant long approval queues and decisions made far from delivery. Layer 2 is the opposite. It is a lightweight set of mechanisms that keep decisions explicit and reversible while work continues.

The difference is subtle but critical. An approval process tells you whether to proceed. Layer 2 makes progress visible while you proceed. It creates a shared understanding of why autonomy is increasing, what evidence supports that shift, and how it can be reduced if the context changes. Governance, in this sense, is how you move faster without giving up safety.

Governance fails when it is a single central gate. Teams learn to work around it, and the organisation loses visibility instead of gaining it. Layer 2 avoids that by distributing decision rights while keeping autonomy changes explicit and reversible.

Trust is not a feeling — it’s evidence

Trust in Layer 2 is an operational concept. It is confidence based on observed behaviour and outcomes, not optimism or reputation. Trust grows through usage, strengthens through validation, and can degrade when context changes. That is why the framework treats trust as something measurable rather than something assumed.

The signals are visible in day-to-day work: adoption consistency, correction frequency, incident patterns, and outcome improvement. These signals let teams discuss trust on the basis of evidence rather than opinion or politics. Layer 2 turns trust into something that can be observed, debated, and acted on.

Worked example: trust shifting with context

A compliance agent performs well in one market and gains strong adoption. A team expands it into another region where policy language differs. Early outputs look accurate, but the context has shifted, so the evidence gathered in the first market no longer applies directly. Layer 2 triggers re-validation before the agent is relied upon fully. The evidence is gathered quickly, trust is rebuilt in the new context, and the expansion proceeds without exposing the organisation to hidden risk.
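To make context-scoped trust concrete, here is a minimal Python sketch. It assumes, for illustration only, that validation evidence is recorded per agent *and* per context, so that evidence from one market does not silently carry over to another. `TrustRegistry`, `record_validation`, and `requires_revalidation` are hypothetical names, not part of ADAM.

```python
from dataclasses import dataclass, field


@dataclass
class TrustRegistry:
    """Hypothetical store of validation outcomes, keyed by (agent, context)."""

    outcomes: dict[tuple[str, str], bool] = field(default_factory=dict)

    def record_validation(self, agent: str, context: str, passed: bool) -> None:
        # Store the latest validation result for this agent in this context only.
        self.outcomes[(agent, context)] = passed

    def requires_revalidation(self, agent: str, context: str) -> bool:
        # Evidence does not transfer between contexts: a context with no recorded
        # validation, or a failed one, needs fresh validation before full reliance.
        return not self.outcomes.get((agent, context), False)


registry = TrustRegistry()
registry.record_validation("compliance-agent", "market-a", passed=True)
needs_check_home = registry.requires_revalidation("compliance-agent", "market-a")  # False
needs_check_new = registry.requires_revalidation("compliance-agent", "market-b")  # True
```

Keying trust by context rather than by agent alone is the design choice that makes the re-validation trigger automatic: expanding into a new region simply lands on a key with no evidence yet.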

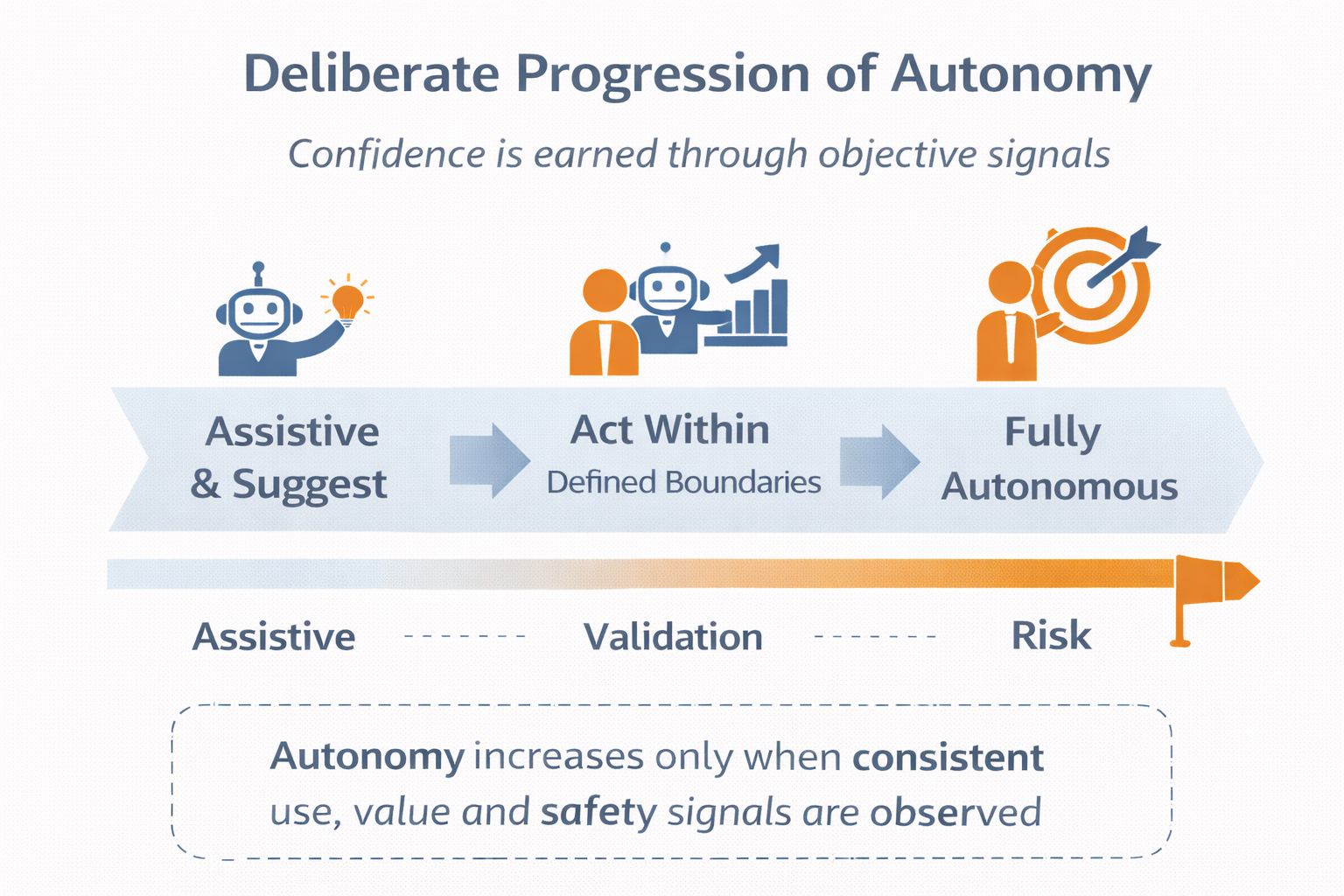

Autonomy is a managed variable (not a feature)

Autonomy is not binary. It is staged and deliberate. Most value comes from augmentation, not automation, and Layer 2 treats autonomy as a variable that can increase or decrease as evidence changes. The goal is not more autonomy, but the right autonomy.

Autonomy creep is dangerous because it happens by convenience rather than by decision. A team trusts an agent a little more, removes a check, and suddenly a boundary has shifted. Layer 2 makes those shifts explicit. It uses adoption signals, validation outcomes, and risk assessment to determine when and how autonomy changes, and it ensures that every change is reversible.
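One way to picture autonomy as a managed variable is a small record that refuses silent changes. This is a hedged sketch, not an ADAM specification: it assumes a three-level scale (assistive, coordinated, autonomous), and `GovernedAgent`, `change_autonomy`, and the field names are hypothetical. The rule it encodes comes from the text: every change needs evidence and named consent, and any increase needs a plan for reducing it again.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Autonomy(IntEnum):
    # Assumed three-level scale; higher values mean more autonomy.
    ASSISTIVE = 1    # suggests; humans do the work
    COORDINATED = 2  # prepares options or bounded actions; humans choose
    AUTONOMOUS = 3   # acts within boundaries without per-item review


@dataclass
class AutonomyChange:
    from_level: Autonomy
    to_level: Autonomy
    evidence: str
    consented_by: str  # e.g. the Accountable FTE
    rollback_plan: str


@dataclass
class GovernedAgent:
    name: str
    level: Autonomy = Autonomy.ASSISTIVE
    history: list[AutonomyChange] = field(default_factory=list)

    def change_autonomy(self, to_level: Autonomy, evidence: str,
                        consented_by: str, rollback_plan: str) -> None:
        # No silent shifts: evidence and a named consenting owner are mandatory.
        if not evidence or not consented_by:
            raise ValueError("autonomy changes need evidence and named consent")
        # Reversibility first: an increase is refused without a rollback plan.
        if to_level > self.level and not rollback_plan:
            raise ValueError("cannot increase autonomy without a rollback plan")
        self.history.append(AutonomyChange(self.level, to_level, evidence,
                                           consented_by, rollback_plan))
        self.level = to_level


agent = GovernedAgent("drafting-agent")
agent.change_autonomy(Autonomy.COORDINATED,
                      evidence="strong adoption, low correction frequency",
                      consented_by="Accountable FTE",
                      rollback_plan="revert to ASSISTIVE if errors rise")
```

Keeping the full change history, rather than only the current level, is what keeps autonomy shifts visible and reviewable after the fact.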

Worked example: when not to increase autonomy

A drafting agent begins in an assistive mode, suggesting copy for a comms team. Adoption is strong, so it becomes coordinated, generating draft variants that humans choose from. However, reputational risk remains high and small errors are costly. Layer 2 keeps the agent non-autonomous despite the performance gains. The decision is explicit: the value is real, but the autonomy level remains bounded because the risk profile has not changed.

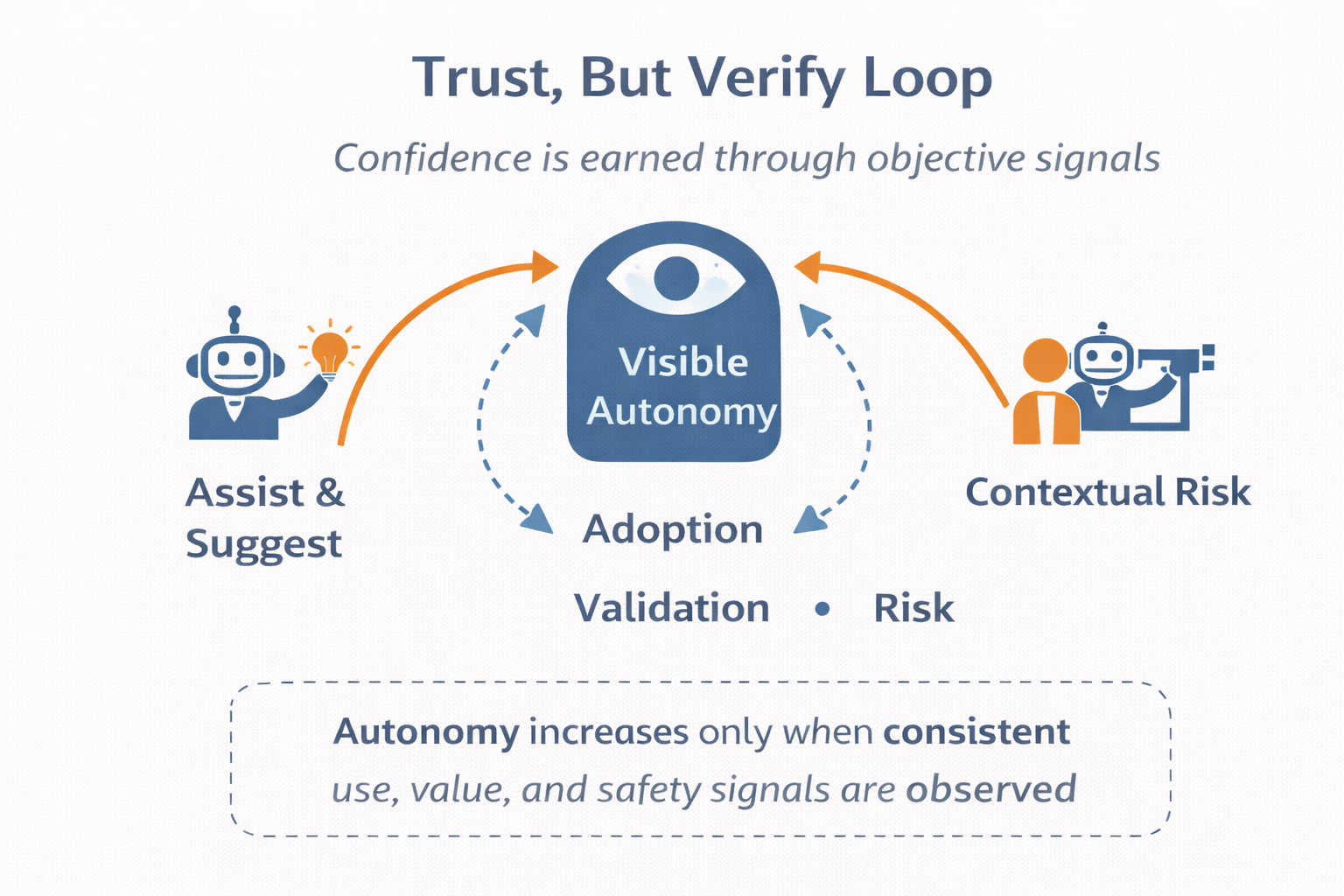

Trust-but-verify as an operating loop

Trust-but-verify is not a one-off review. It is a loop that runs alongside delivery. Validation must be continuous because AI performance can change with data, usage patterns, model updates, and context shifts. Verification is not only about accuracy; it includes ensuring boundaries are respected and outcomes remain aligned with intended value.

Worked example: adoption with mixed validation

An insights agent is widely adopted across a team because it saves time. Validation shows occasional hallucinations that could mislead decisions. Layer 2 does not remove the agent; it constrains autonomy, introduces review checkpoints, and continues measuring outcomes. Adoption remains, but trust is recalibrated through evidence rather than assumption.

Risk is contextual, not theoretical

Risk does not live inside the agent alone. It emerges from the interaction between the agent, the task, the data, and the environment. The same agent can be low-risk in one context and high-risk in another. Reputation, compliance exposure, privacy constraints, and operational dependence change with the situation, not just with the technology.

Layer 2 handles risk by adjusting autonomy and controls proportionately. The aim is not to ban progress, but to align progress with the right safeguards. Overreacting to risk creates shadow AI, where teams bypass governance entirely. Underreacting creates silent fragility. Layer 2 exists to keep risk visible and open to explicit decisions.

Reversibility is a design requirement

Reversibility is what makes autonomy safe. If you cannot reduce autonomy cleanly, you should not increase it. Layer 2 treats reversibility as an engineering feature, not a failure mechanism. It requires a practical plan for how autonomy is reduced and who decides when that reduction is necessary.

This matters because governance is about sustainable progress. The ability to step back without drama builds confidence for moving forward. It tells teams that autonomy is always conditional on evidence, not on hope.

Worked example: rollback as confidence

A coordinated agent is allowed to take bounded actions in a workflow. A rollback condition is defined in advance: if error rates rise or policy changes, autonomy is reduced to assistive mode. When the trigger is met, the rollback occurs cleanly, and the team continues to benefit from the agent without exposure. Rather than undermining trust, the rollback reinforces it because everyone understands that progress is reversible.
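The pre-agreed rollback condition in this example can be written down as a small rule that is evaluated against current evidence. This is a hedged sketch: the `RollbackRule` class, its fields, and the 5% error-rate threshold are illustrative assumptions, not names or values defined by ADAM.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    ASSISTIVE = 1
    COORDINATED = 2


@dataclass(frozen=True)
class RollbackRule:
    """Hypothetical rollback condition, agreed before autonomy is granted."""

    max_error_rate: float  # illustrative threshold, not an ADAM value
    fallback: Autonomy = Autonomy.ASSISTIVE
    decision_owner: str = "Accountable FTE"  # who confirms the reduction

    def evaluate(self, level: Autonomy, error_rate: float,
                 policy_changed: bool) -> tuple[Autonomy, str]:
        # Either trigger reduces autonomy to the fallback level; otherwise keep it.
        if policy_changed:
            return self.fallback, "policy changed"
        if error_rate > self.max_error_rate:
            return self.fallback, "error rate above agreed threshold"
        return level, "within bounds"


rule = RollbackRule(max_error_rate=0.05)
level, reason = rule.evaluate(Autonomy.COORDINATED, error_rate=0.02, policy_changed=False)
# level stays COORDINATED
level, reason = rule.evaluate(level, error_rate=0.09, policy_changed=False)
# level is now ASSISTIVE
```

Because the rule exists before autonomy is increased, the rollback becomes a routine, pre-agreed step rather than a judgment made under pressure.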

Roles in Layer 2

The Accountable FTE remains central in Layer 2. This person consents to autonomy changes and owns outcomes, even as agents become more capable. The ADAM Governance Steward ensures decisions are documented, visible, and reversible so that autonomy changes are never accidental. Risk and compliance partners advise on boundaries; they are inputs to decision-making, not gatekeepers.

The purpose is clarity of decision rights: who can decide, who must be consulted, and who must be informed. A governance review moment might include the Delivery Owner presenting validation evidence, the Steward confirming reversibility, and a risk partner highlighting any boundary shifts. The decision is then made openly, with ownership visible.

What good looks like

When Layer 2 works, teams feel safe adopting agents because boundaries are clear. Leaders feel confident because autonomy is visible and verified. Risk feels proportionate rather than blocking. Autonomy changes are deliberate, documented, and reversible. Trust grows at the same pace as capability.

How Layer 2 connects to Layer 1 and Layer 3

Layer 1 sets the baseline clarity. Layer 2 keeps trust stable while AI is in real use. Layer 3 governs maturity and makes governance lighter as confidence increases. Skipping Layer 2 forces a false choice: freeze autonomy or let it expand unchecked. Layer 2 avoids that by keeping autonomy explicit, evidence-based, and reversible.