Decision Framework

Deciding when and how to use AI responsibly

In the Agency of Agents model, the most important question is not “Can we use AI?” It is: “Should we use AI here — and if so, how?”

A calm, deliberate approach to value creation, adoption, and governance.

Why a decision framework matters

Without a clear decision framework, organisations risk:

- Automating the wrong work

- Introducing AI where trust does not yet exist

- Creating complexity faster than value

- Losing clarity over accountability

The Decision Framework exists to ensure that AI is applied deliberately, appropriately, and in service of value creation.

A three-principle approach

- Augmentation before automation — AI is introduced to support people, not backfill them.

- Adoption before autonomy — Capability must be used and trusted before it is expanded.

- Validation before scale — Value, trust, and control must be demonstrated in practice.

Decision Framework at a glance

The framework uses four deliberate questions to guide decisions. It is designed to be repeatable and explainable, and to be revisited over time.

Starting point: human accountability

Human accountability is always explicit.

- Who is accountable for the outcome?

- What decisions remain human?

- What does success look like?

If accountability cannot be clearly defined, the use of AI should be reconsidered.

The decision questions

The framework uses four deliberate questions to guide AI decisions. Each step is designed to feel practical: start with suitability, define a quantum of value, choose an appropriate level of autonomy, and confirm risks and constraints.

01. Is the work suitable for augmentation?

Not all work benefits from AI. The best candidates are tasks where structure and repeatability exist, and where introducing an agent reduces effort or increases consistency without weakening accountability. The decision here is less about capability and more about whether augmentation improves delivery in a way teams will actually adopt.

Strong fit signals

- Work is repeatable, structured, and defined well enough for an agent to contribute consistently.

- Inputs and outcomes can be described clearly and verified in practice.

- People remain accountable for results and decisions.

Proceed carefully when…

- Decisions are highly ambiguous or context shifts constantly.

- Trust is fragile or unproven, or the environment is high-stakes.

- Accountability cannot be clearly defined.

02. Is the value clear and measurable?

Before introducing an agent, value must be expressed as a discrete, testable unit. In the Agency of Agents model, this is called a quantum of value, a term borrowed from physics, where a quantum is the smallest discrete unit of a physical quantity. A quantum of value is a clear improvement that can be observed through adoption and measured through outcomes, not activity.

Quantum of value: the smallest useful unit of improvement that can be validated in real work — through adoption, outcomes, and evidence.

Define value before you build

- Expected quantum of value: what will improve in a meaningful way?

- Validation method: how will we prove it in real use?

- Adoption signal: how will we know it is being used?

If value cannot be validated through real-world usage, the agent should not progress.
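As an illustration only, the three definitions above could be recorded in a small structure before any build work starts. This is a minimal sketch in Python, not part of the framework itself: the class name `QuantumOfValue`, its fields, and the `may_progress` rule are hypothetical names chosen for this example, and the rule encodes one reading of "if value cannot be validated through real-world usage, the agent should not progress".

```python
from dataclasses import dataclass


@dataclass
class QuantumOfValue:
    """Hypothetical record of one quantum of value (all names are illustrative)."""

    expected_improvement: str  # what will improve in a meaningful way
    validation_method: str     # how the improvement will be proven in real use
    adoption_signal: str       # how we will know the agent is being used
    validated_in_real_use: bool = False  # set only once evidence from real work exists

    def is_defined(self) -> bool:
        # All three definitions must be filled in before building.
        fields = (
            self.expected_improvement,
            self.validation_method,
            self.adoption_signal,
        )
        return all(text.strip() for text in fields)

    def may_progress(self) -> bool:
        # Assumed rule: an agent progresses only when its value is fully
        # defined and has been validated through real-world usage.
        return self.is_defined() and self.validated_in_real_use
```

A record with any of the three fields left blank fails `is_defined()`, which makes "define value before you build" something a team can check rather than assume.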

03. What level of autonomy is appropriate?

Autonomy is not binary. Most value is created through augmentation, where people lead and agents assist. Increased autonomy is applied selectively, informed by adoption, validation, and risk. The aim is to expand capability without expanding uncertainty.

- Assistive: supports individuals and teams without acting independently.

- Coordinated: manages structured tasks within defined boundaries.

- Supervised autonomy: operates with limited autonomy under explicit governance and verification.

Autonomy expands only when adoption is strong, validation is positive, and risk is controlled.

Autonomy is deliberate, reversible, and never assumed.
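The expansion rule above can be sketched as a simple gate. The following Python example is a hedged illustration, not a prescribed implementation: `AutonomyLevel`, `next_level`, and `step_down` are hypothetical names, and moving one level at a time is an assumption of this sketch rather than something the framework states.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """The three levels described above, ordered from least to most autonomous."""

    ASSISTIVE = 1            # supports people without acting independently
    COORDINATED = 2          # manages structured tasks within defined boundaries
    SUPERVISED_AUTONOMY = 3  # limited autonomy under explicit governance


def next_level(
    current: AutonomyLevel,
    adoption_strong: bool,
    validation_positive: bool,
    risk_controlled: bool,
) -> AutonomyLevel:
    """Return the level an agent may move to; autonomy is never assumed."""
    # Expansion requires all three conditions; otherwise the level is unchanged.
    if not (adoption_strong and validation_positive and risk_controlled):
        return current
    # Assumption of this sketch: expand by at most one level per review.
    return AutonomyLevel(min(current + 1, AutonomyLevel.SUPERVISED_AUTONOMY))


def step_down(current: AutonomyLevel) -> AutonomyLevel:
    """Autonomy is reversible: move one level down, never below ASSISTIVE."""
    return AutonomyLevel(max(current - 1, AutonomyLevel.ASSISTIVE))
```

Keeping `step_down` alongside `next_level` reflects the point that autonomy is reversible: reducing an agent's level is an ordinary operation, not an exceptional one.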

04. What are the risks and constraints?

Every decision must account for risk. The goal is not to eliminate risk entirely, but to make it visible, bounded, and manageable. If an agent cannot be paused, adjusted, or removed safely, then the design is incomplete.

Risk considerations

- Reputational exposure

- Regulatory or compliance boundaries

- Data sensitivity and privacy

- Operational dependence

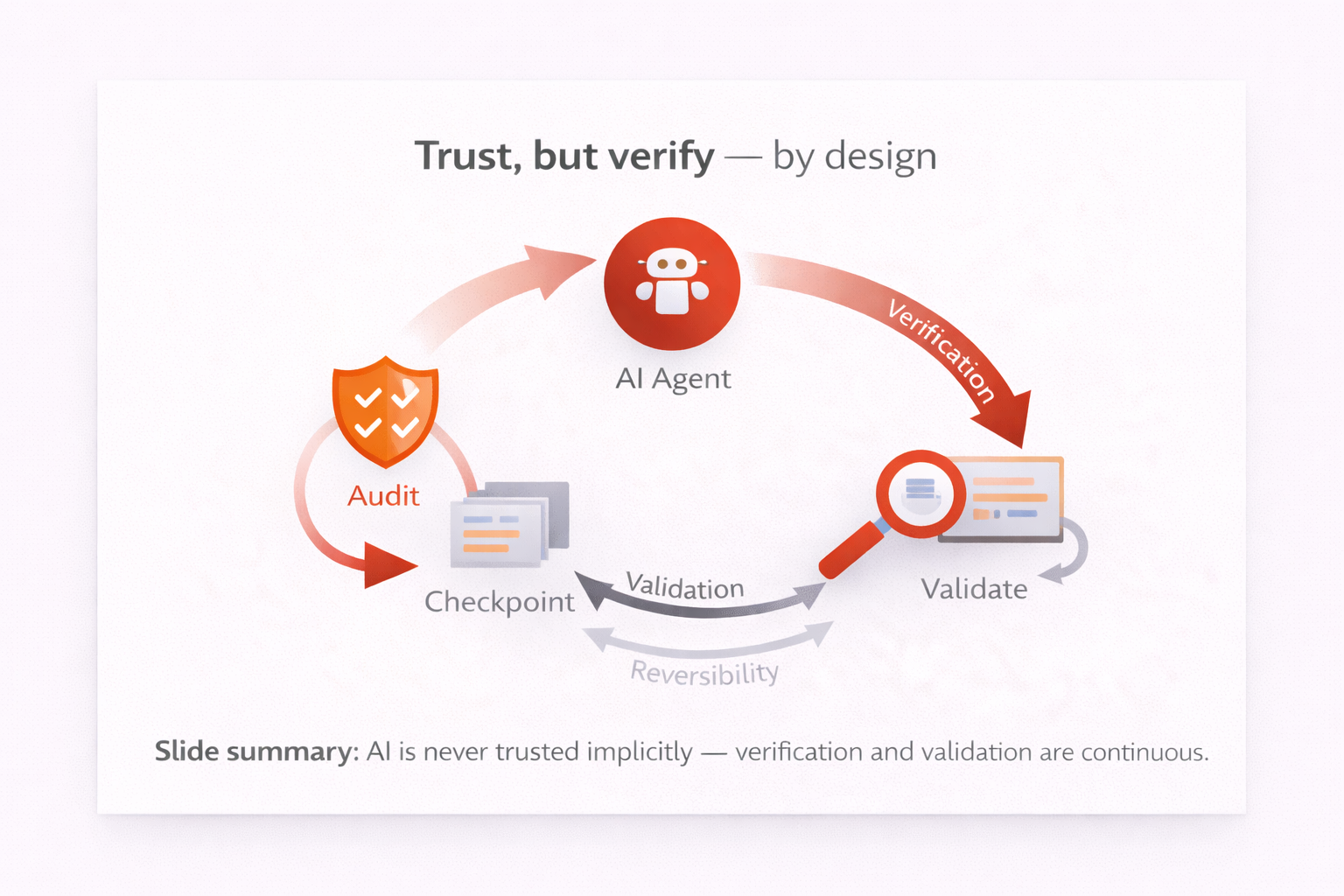

Non-negotiable control

The ability to pause, adjust, or remove an agent is a requirement — not an afterthought. Reversibility is a design feature.

FTE and FTA decisions

Full-Time Employees (FTEs)

Accountable for outcomes, decisions, intent, and direction.

Full-Time Agents (FTAs)

Augment capability by reducing effort, increasing consistency, supporting scale, and enabling structured decision frameworks to be applied consistently.

- FTE-led work is the default

- FTAs support defined activities

- Full autonomy is applied selectively

Trust, but verify — by design

AI operates on a trust-but-verify basis and is never trusted implicitly. Verification and validation are continuous, not one-time events.

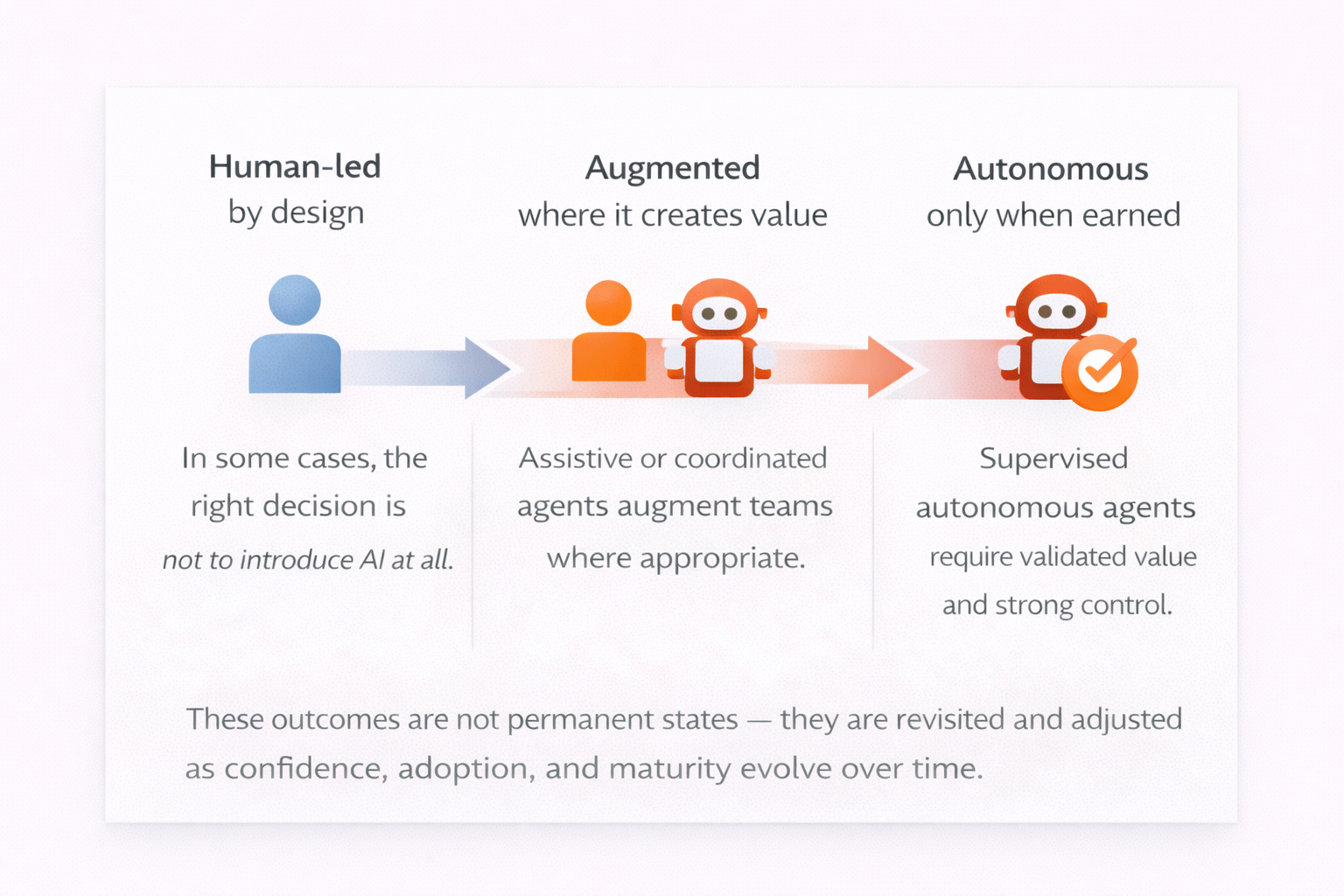

Decision outcomes

Decisions lead to one of three outcome patterns. These are designed to be clear and explainable, and to be revisited over time.

Human-led by design

In some cases, the right decision is not to introduce AI at all. Where work is highly contextual, non-repeatable, or dependent on human nuance, human-led delivery remains the most effective and responsible approach. Choosing not to use AI is a valid and intentional outcome of the Decision Framework.

Augmented where it creates value

Where AI can reduce effort, improve consistency, or support scale, assistive or coordinated agents may be introduced to augment teams. In these cases, people remain accountable, while agents operate within clearly defined boundaries — supporting delivery without acting independently.

Autonomous only when earned

In limited and well-understood contexts, supervised autonomous agents may be appropriate. This occurs only where adoption is strong, value has been validated, risks are controlled, and reversibility is assured. Autonomy is never assumed and is always subject to ongoing verification.
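To show how the four questions could lead to the three outcome patterns, here is a minimal Python sketch. It is one possible reading of the framework under stated assumptions, not its definitive logic: `Outcome`, `Assessment`, `decide`, and every field name are hypothetical, and the order of the checks is an assumption of this example. The function returns the most autonomous outcome that is eligible for consideration; it does not mandate that outcome.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    HUMAN_LED = "Human-led by design"
    AUGMENTED = "Augmented where it creates value"
    AUTONOMOUS = "Autonomous only when earned"


@dataclass
class Assessment:
    """Hypothetical answers gathered from the four decision questions."""

    accountability_defined: bool     # starting point: a named human is accountable
    suitable_for_augmentation: bool  # question 01
    value_defined: bool              # question 02: quantum of value is expressed
    value_validated: bool            # question 02: proven in real-world use
    adoption_strong: bool            # question 03
    risks_controlled: bool           # question 04
    reversible: bool                 # question 04: can pause, adjust, or remove


def decide(a: Assessment) -> Outcome:
    # Assumed reading: without accountability, suitability, a defined value,
    # and reversibility, no agent is introduced at all.
    prerequisites = (
        a.accountability_defined
        and a.suitable_for_augmentation
        and a.value_defined
        and a.reversible
    )
    if not prerequisites:
        return Outcome.HUMAN_LED
    # Supervised autonomy is eligible only once it has been earned.
    if a.adoption_strong and a.value_validated and a.risks_controlled:
        return Outcome.AUTONOMOUS
    # Otherwise people lead and agents assist within defined boundaries.
    return Outcome.AUGMENTED
```

Because the result depends only on the current assessment, rerunning `decide` as evidence changes models the idea that an outcome can move in either direction over time.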

Decisions evolve over time

As adoption increases and confidence grows, agents may evolve, autonomy may increase or decrease, and approaches may change.

The Decision Framework is designed to support continuous reassessment, not fixed conclusions.

Governed through ADAM

All decisions within the framework are governed through ADAM, the AI Delivery and Management framework, so that:

- decisions are documented

- validation is performed consistently

- autonomy is managed deliberately

- evolution remains controlled

In summary

The Decision Framework is designed to ensure that AI is applied with intent, clarity, and discipline. It recognises that not all work benefits from AI, and that value is created not through automation for its own sake, but through deliberate augmentation of human capability. Decisions are grounded in adoption and validation, with autonomy increasing only where confidence, evidence, and control are established.

Throughout, accountability remains human. AI is introduced to support people, not backfill them, and always operates on a trust-but-verify basis — never trusted implicitly. As teams mature and confidence grows, decisions are revisited and refined, ensuring the approach remains appropriate, responsible, and value-driven over time.

This means that in practice:

- Not all work should use AI

- Augmentation comes before automation

- Adoption and validation guide autonomy

- Accountability remains human

- AI operates on a trust-but-verify basis and is never trusted implicitly

- Decisions are revisited as maturity grows